Hello, I'm Aaptha Boggaram!

Skilled in Software Development, Machine Learning, Web & App Development, and AI-powered Systems.

I build intelligent, efficient, and scalable solutions at the intersection of AI, web technologies, and robotics, and I love integrating machine learning into web and mobile applications to create seamless, cutting-edge user experiences. Whether it's developing full-stack applications, leveraging AI for automation, or working with embedded systems and robotics, I'm always exploring innovative ways to blend AI with software engineering.

Tech I use (including but not limited to)

Work Experience

Graduate Teaching Assistant at University of Colorado Boulder

Aug 2024 - May 2025

- Conducted weekly coding labs on R programming concepts and data analysis for 50+ students and developed automated grading scripts in Python with test cases to streamline assignment evaluation.

Software Engineering Intern at The Kroger Co.

May 2024 - Aug 2024

- Independently developed software to automate the creation of product variant groups, leveraging Large Language Models (LLMs) and Generative AI (GenAI) to categorize product information efficiently and making it accessible to over 11 million Kroger users.

- Designed and implemented major database changes for an upcoming release, and worked closely with 84.51° to integrate these changes into a workflow that automates manual processes carried out through the merchant portal on Kroger’s web application.

- Worked in an agile team, contributing UI enhancements to Kroger’s web application using React with TypeScript and improving backend features in Golang.

- Debugged issues to ensure software stability, managed minor tasks to support project efficiency, and updated documentation.

Graduate Teaching Assistant at University of Colorado Boulder

Jan 2024 - May 2024

- Served as a Teaching Assistant for an introductory C++ course (CSCI1300) with over 900 students, conducting recitations, crafting C++ assignments, grading, and designing innovative projects.

Software Intern at Continental AG

Jan 2023 - July 2023

- Demonstrated “Road Type Classification” and “Road Curve Estimation” using Continental’s Advanced Radar Sensors as an R&D core developer, and published a research article at the IEEE CONECCT 2024 conference.

- Developed novel algorithms for these two tasks, improving performance by 18% and 25%, respectively, over Continental’s existing work.

- Processed radar data to create 5+ accurate and reliable datasets for analysis and modeling.

Research Assistant at Centre for Heterogeneous and Intelligent Processing Systems

May 2021 - Dec 2021

- Designed and tested a lightweight (~8 MB) machine learning model with reinforcement learning for cardiac anomaly detection on wearable devices, achieving benchmark accuracy on cutting-edge microprocessors.

- Research Group Link

Summer Intern at LivNSense Technologies

June 2021 - Sept 2021

- Implemented and deployed a real-time pothole detection framework on an Arduino Nano BLE Sense, reducing AI model size by 94.5% through quantization while maintaining high accuracy.
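The quantization step in the last entry can be illustrated with a minimal sketch. It assumes the common affine int8 scheme (real value ≈ scale * (q - zero_point), the representation TensorFlow Lite uses for int8 models); the source does not say which scheme or toolchain was actually used, and `quantize_int8` and `dequantize` are hypothetical names. Storing each weight in 1 byte instead of 4 accounts for a 75% reduction on its own; how the rest of the reported 94.5% was obtained is not described here.

```python
import array


def quantize_int8(weights):
    """Affine post-training quantization: real_value ~= scale * (q - zero_point)."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    lo, hi = min(min(weights), 0.0), max(max(weights), 0.0)
    scale = (hi - lo) / 255 or 1.0  # avoid dividing by zero for an all-zero tensor
    zero_point = round(-128 - lo / scale)
    # Round to the nearest code and clamp to the signed 8-bit range.
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return array.array("b", q), scale, zero_point


def dequantize(q, scale, zero_point):
    """Map int8 codes back to approximate float values."""
    return [scale * (v - zero_point) for v in q]


if __name__ == "__main__":
    weights = array.array("f", [-0.5, -0.1, 0.0, 0.25, 0.8])  # hypothetical float32 weights
    q, scale, zero_point = quantize_int8(weights)
    print(list(q))
    print(weights.itemsize, "->", q.itemsize, "bytes per weight")
```

Because each code is rounded to the nearest step, every unclamped weight is recovered to within half a quantization step (`scale / 2`), which is why accuracy can survive the size reduction.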

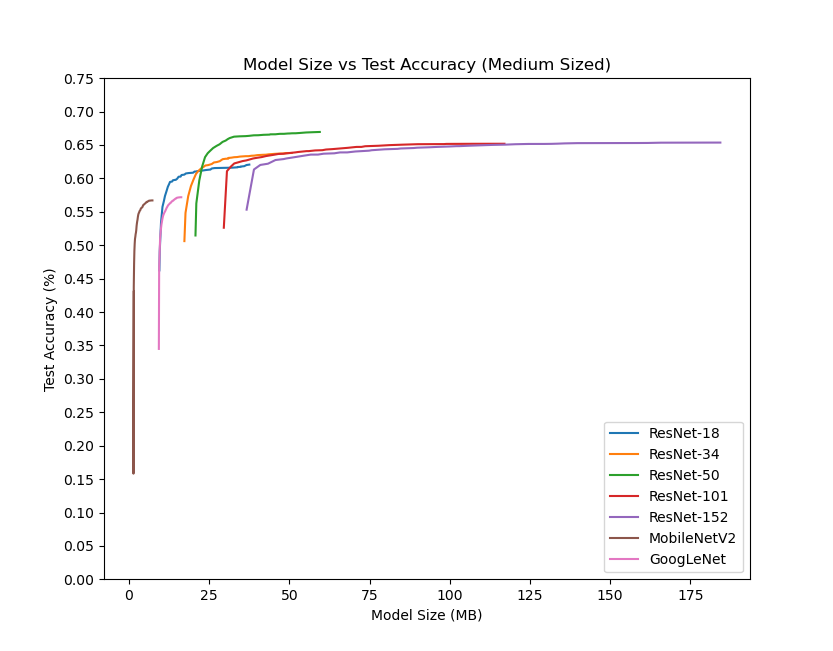

DotDNN: A Block-Grained Scaling Framework for Identifying the Best DNNs for Edge Vision

Tech stack: Python, CUDA, Docker, PyTorch

Developed a dynamic, block-grained scaling system enabling adaptive DNN selection and deployment on edge devices while maintaining optimal accuracy-latency trade-offs under fluctuating compute and memory constraints.

Project Link

Offline Reinforcement Learning for Cardiac Pacemakers

Tech stack: Python, Docker, TensorFlow/PyTorch, WFDB

Developed, in collaboration with CU Anschutz, a state-of-the-art offline reinforcement learning model that uses historical patient data from Medtronic pacemakers to optimize pacemaker parameters for improved cardiac rhythm pacing.

Project Link

Cruella: Autonomous Vehicle Navigation System

Tech stack: Robot Operating System, Python, C++, CMake, AWS, Embedded C

Developed an autonomous vehicle controller using ROS2, implementing LIDAR-based SLAM for navigation along with reverse parking, obstacle avoidance, and stop sign detection. The team was recognized as one of the fastest to navigate a closed indoor course, finishing in under 2 minutes.

Project Link

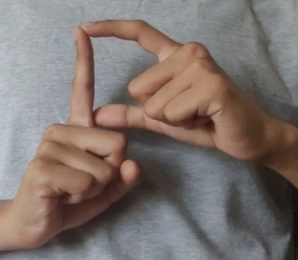

RealSign: Real-time bidirectional Indian Sign Language Translator

Tech stack: Python, PyTorch, Keras, Flask, TensorFlow

Led a team of 4 to develop an app for real-time bidirectional Indian Sign Language translation, training a Siamese Neural Network on a self-collated dataset of 34,000+ images, which is now released as one of the largest open-source ISL datasets.

Project Link

My Publications

Km-IFC: A Framework for Road Course Estimation Using Radar Detections

Km-IFC is a novel framework that estimates road courses using radar detections. It combines K-means clustering, Isolation Forest, and the least-squares method, and its estimates match the paths actually driven by the vehicle with an average difference of 0.365 meters and a confidence coefficient of 0.88. This efficient, self-contained solution processes raw sensor data without relying on external outputs.
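The summary above names the framework's building blocks but not how they are wired together. As a hedged illustration of the last two stages only, the sketch below assumes the detections have already been grouped into a single road-boundary cluster (so the K-means step is omitted), scores them with a small from-scratch Isolation Forest, discards the most isolated `contamination` fraction, and fits a quadratic road course y = c0 + c1·x + c2·x² by least squares. The quadratic model, the 10% contamination, and every function name here are assumptions for illustration, not the published Km-IFC method.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def c_factor(n):
    """Average unsuccessful-search path length in a BST of n points (Liu et al., 2008)."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0  # exact value, since H(1) = 1
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Grow one isolation tree using random axis-aligned splits."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    # Only split on coordinates that still vary inside this node.
    dims = [d for d in range(len(points[0]))
            if min(p[d] for p in points) < max(p[d] for p in points)]
    if not dims:
        return ("leaf", len(points))
    d = rng.choice(dims)
    split = rng.uniform(min(p[d] for p in points), max(p[d] for p in points))
    left = [p for p in points if p[d] < split]
    right = [p for p in points if p[d] >= split]
    return ("node", d, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))


def path_length(point, tree):
    """Depth at which `point` reaches a leaf, plus the expected depth left inside it."""
    depth = 0
    while tree[0] == "node":
        _, d, split, left, right = tree
        tree = left if point[d] < split else right
        depth += 1
    return depth + c_factor(tree[1])


def anomaly_scores(points, n_trees=100, seed=0):
    """Isolation Forest scores; higher means a point is easier to isolate."""
    rng = random.Random(seed)
    sample_size = min(256, len(points))
    max_depth = math.ceil(math.log2(sample_size))
    trees = [build_tree(rng.sample(points, sample_size), 0, max_depth, rng)
             for _ in range(n_trees)]
    norm = c_factor(sample_size)
    return [2.0 ** (-sum(path_length(p, t) for t in trees) / n_trees / norm)
            for p in points]


def fit_quadratic(xs, ys):
    """Least-squares fit of y = c0 + c1*x + c2*x**2 via the normal equations."""
    m = [[sum(x ** (i + j) for x in xs) for j in range(3)]
         + [sum(y * x ** i for x, y in zip(xs, ys))] for i in range(3)]
    for col in range(3):  # Gaussian elimination with partial pivoting
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[col])]
    coeffs = [0.0, 0.0, 0.0]
    for i in range(2, -1, -1):  # back substitution
        coeffs[i] = (m[i][3] - sum(m[i][j] * coeffs[j] for j in range(i + 1, 3))) / m[i][i]
    return coeffs


def estimate_road_course(detections, contamination=0.1, seed=0):
    """Drop the most isolated (x, y) detections, then fit a quadratic road course."""
    scores = anomaly_scores(detections, seed=seed)
    n_keep = len(detections) - int(len(detections) * contamination)
    kept = sorted(range(len(detections)), key=lambda i: scores[i])[:n_keep]
    return fit_quadratic([detections[i][0] for i in kept],
                         [detections[i][1] for i in kept])


if __name__ == "__main__":
    # Hypothetical boundary y = 3.5 + 0.002 * x**2 plus two clutter detections.
    detections = [(float(x), 3.5 + 0.002 * x * x) for x in range(51)]
    detections += [(20.0, 20.0), (30.0, -10.0)]
    print(estimate_road_course(detections))
```

Ranking detections and dropping a fixed fraction mirrors the `contamination` parameter of scikit-learn's `IsolationForest`; a fixed score threshold would be an equally reasonable choice.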

RtTSLC: A Framework for Real-time Two-handed Sign Language Translation

RtTSLC is a framework for real-time translation of two-handed Indian Sign Language (ISL) finger-spelled English alphabet gestures. Employing VGG16, EfficientNet, and AlexNet architectures, it achieved accuracies of 92%, 99%, and 89%, respectively, demonstrating its potential to aid the hard-of-hearing community.

Sign Language Translation Systems: A Systematic Literature Review

Conducted a systematic literature review on sign language translation systems, identifying the state-of-the-art techniques, challenges, and future research directions in the field.

Cardiac Anomaly Detection for Wearable Devices

Developed a deep learning model to detect cardiac anomalies in ECG signals, achieving an accuracy of 99.5% and a sensitivity of 99.6% on the MIT-BIH Arrhythmia Database. The model was deployed on a wearable device to provide real-time feedback to users.